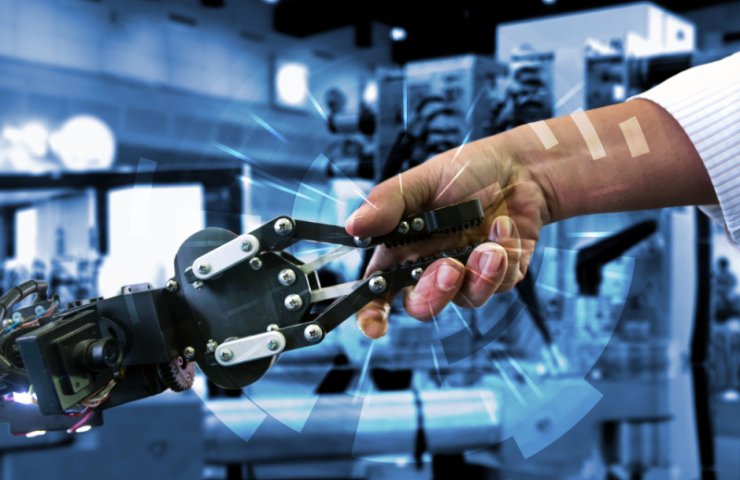

Twenty years ago, almost the entire robotics market consisted of industrial robots. These machines handled welding, painting, or simple transfer operations in factories and were always kept apart from human workers. Today robots are starting to work side by side with people and are entering everyday life. What technologies are helping robots become more human? Alexey Gonnochenko, head of Sber's Robotics Laboratory, explains.

Let's define the terminology: what devices can be considered robots?

Originally, a robot was understood to be a device that can change its sequence of actions according to the data it receives from its sensors. The first industrial robots appeared in the United States back in the mid-1950s. These were numerically controlled manipulators that automated simple tasks in mass production.

Since then, robots have become much more complex, and the word itself, at least in everyday use, is no longer so unambiguous. We call a robot vacuum cleaner a robot, but for some reason not a washing machine, although it too is packed with sensors and can choose its own wash cycle. A self-driving car is, of course, also a robot, but that label has not stuck to it either.

What important things have robots learned in recent years?

From the very beginning, everyone dreamed of an ideal robot assistant that would dust the shelves and walk the dog. In reality, even twenty years ago the robot's place was inside a closed cage, where it could do something useful without harming anyone. At that time 95% of robotics was concentrated in manufacturing, and human access to the robot's working area was strictly limited. Robots were smart machines, but dangerous ones: they did not have enough sensors to monitor the space around them, because at the time that was considered unnecessary.

Then, ten to fifteen years ago, so-called collaborative robotic arms appeared. They were equipped with additional sensors that allowed them to work relatively safely next to a person. We now understand that this was a breakthrough that significantly widened the range of applications for robots. It became possible to use robots in an unprepared environment, with no need to create special conditions for them. The programming interface was also greatly simplified: a non-engineer can configure such a robot after an hour of training. This gave a push to the automation of processes that nobody had even tried to automate before.

Tell us about the technologies that made this happen.

Collaborative manipulators themselves did not require any special technology; the market and the general progress of technology simply prepared the ground for them. More complex examples, such as a robot vacuum cleaner or a self-driving car, became possible thanks to a significant increase in computing power. There are now compact, affordable, yet powerful computers that can be placed on board a robot of any size.

To collect information about its surroundings, a robot needs vision: a camera or some other sensors. The data from these sensors has to be processed, and that requires computing power. The more data there is and the more complex the on-board processing, the more power the robot needs.
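To give a rough sense of scale, the sketch below estimates the raw data rate of an uncompressed sensor stream. The resolutions, frame rates, and pixel sizes are illustrative assumptions, not figures from the interview or from any specific sensor.

```python
def raw_data_rate_mb_per_s(width, height, bytes_per_pixel, fps):
    """Uncompressed stream rate in megabytes per second (1 MB = 10**6 bytes)."""
    return width * height * bytes_per_pixel * fps / 1e6


# Hypothetical sensor settings, chosen only for illustration.
color = raw_data_rate_mb_per_s(1280, 720, 3, 30)  # 8-bit RGB color image
depth = raw_data_rate_mb_per_s(640, 480, 2, 30)   # 16-bit depth map

print(f"color: {color:.1f} MB/s, depth: {depth:.1f} MB/s")
# color: 82.9 MB/s, depth: 18.4 MB/s
```

Even under these modest assumptions, a single color and depth pair produces roughly 100 MB of raw data every second, before any recognition or planning has been done on it.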

It is actually hard for a person to understand why robots cannot cope with some tasks. We handle unfamiliar objects with ease and learn to use new tools. But we forget that we spend the first ten to fifteen years of our lives continuously learning this skill. A modern robot has no such mechanism for accumulating experience. It can be programmed to work with one object, but it cannot transfer that skill to another object of a similar class or pool its past experience with different things. The same goes for moving through space. When we walk through an office or along a street, we subconsciously track the trajectories of all the objects we see in order to plan our path and avoid collisions. We do not think about how much information we are processing at that moment.
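As a toy illustration of what tracking a single trajectory involves, the sketch below computes when and how closely a robot and one moving object will pass each other. It assumes both move in a plane at constant velocity, which is a strong simplification; the function names and the safety radius are hypothetical and do not describe the laboratory's method.

```python
import math


def closest_approach(p_robot, v_robot, p_obj, v_obj):
    """Return (time, distance) of closest approach for two points that
    move at constant velocity in the plane. Time is clamped to t >= 0."""
    # Position and velocity of the object relative to the robot.
    rx, ry = p_obj[0] - p_robot[0], p_obj[1] - p_robot[1]
    vx, vy = v_obj[0] - v_robot[0], v_obj[1] - v_robot[1]
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0:
        t = 0.0  # no relative motion: the distance never changes
    else:
        # Minimize |r + v*t|; ignore solutions in the past.
        t = max(0.0, -(rx * vx + ry * vy) / speed_sq)
    return t, math.hypot(rx + vx * t, ry + vy * t)


def is_on_collision_course(p_robot, v_robot, p_obj, v_obj, safety_radius=0.5):
    """True if the two points will come closer than safety_radius (meters)."""
    _, distance = closest_approach(p_robot, v_robot, p_obj, v_obj)
    return distance < safety_radius


# Robot heads along x at 1 m/s; a pedestrian crosses its path at 1 m/s.
t, d = closest_approach((0, 0), (1, 0), (4, -4), (0, 1))
print(f"closest approach in {t:.1f} s at {d:.1f} m")
```

A real robot has to repeat a check like this many times per second for every object in view, with noisy position estimates and objects that change speed and direction, which is part of why on-board computing power matters so much.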

Intel® NUC is one such platform that can be used to create robots that perform deep processing of sensory data.

What Intel products do you use in the lab?

We use Intel NUC computers, usually the highest-performance models. In research it is always easier to take the top-end model so as not to spend time on code optimization. Serious optimization in the early stages of algorithm research is a waste of time. When it comes to mass production, reliability becomes important: the computer at the heart of the robot must run 24/7 for five years without shutting down.

We use Intel RealSense™ depth-sensing technology as one of the robot's "vision" sensors. IN